K-Means Clustering Method

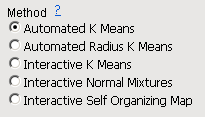

Use the radio buttons to select the method used to form the clusters. The Automated K Means method is selected by default.

Available options are described in the table below:

| Clustering Method | Description |
| --- | --- |
| Automated K Means | Choose this method to compute a fixed number of clusters. This method calls SAS/STAT PROC FASTCLUS. Refer to the PROC FASTCLUS documentation for additional details. |
| Automated Radius K Means | Choose this method to use the Correlation Radius for Clustering parameter to determine the number of clusters. This method calls SAS/STAT PROC FASTCLUS. Refer to the PROC FASTCLUS documentation for additional details. |
| Interactive K Means | This method performs an iterative alternating fitting process to form the specified number of clusters. The k-means clustering method first selects a set of k points, called cluster seeds, as a first guess of the cluster means. Each observation is assigned to the nearest seed to form a set of temporary clusters. The seeds are then replaced by the cluster means, the points are reassigned, and the process continues until no further changes occur in the clusters. The k-means approach is a special case of a general approach called the EM algorithm, where E stands for Expectation and M stands for Maximization. In this case, the expectation step assigns each point to its closest cluster, and the maximization step recomputes the cluster means. This method is intended for use with larger data tables, from approximately 200 to 100,000 observations. With smaller data tables, the results can be highly sensitive to the order of the observations in the data table. |
| Interactive Normal Mixtures | Normal mixtures is an iterative technique, but it is more of an estimation method that characterizes the cluster groups than a clustering method that groups rows. Rather than classifying each row into a cluster, it estimates the probability that a row is in each cluster. The normal mixtures approach to clustering predicts the proportion of responses expected within each cluster. The assumption is that the joint probability distribution of the measurement columns can be approximated by a mixture of multivariate normal distributions, each of which represents a different cluster. Each cluster's distribution has its own mean vector and covariance matrix. Hierarchical and k-means clustering methods work well when clusters are well separated, but when clusters overlap, assigning each point to exactly one cluster is problematic. In the overlap areas, points from several clusters share the same space. It is especially important to use normal mixtures rather than k-means clustering if you want an accurate estimate of the total population in each group, because the estimate is based on membership probabilities rather than on arbitrary cluster assignments based on borders. |
| Interactive Self Organizing Map | The Self Organizing Map (SOM) method forms clusters in a particular layout on a cluster grid, such that points in clusters that are near each other in the SOM grid are also near each other in multivariate space. This grid structure helps interpret the clusters in two dimensions: clusters that are close together are more similar than clusters that are far apart. |
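
The alternating fitting process described for Interactive K Means, and the membership probabilities described for Interactive Normal Mixtures, can be illustrated with a small sketch. This is a minimal illustration in plain Python under simplifying assumptions, not the product's implementation and not what PROC FASTCLUS does internally. The function names (`k_means`, `nearest`, `membership_probabilities`), the sample points, and the one-dimensional mixture parameters are all hypothetical and chosen only for illustration.

```python
import math


def nearest(point, centers):
    """Return the index of the center closest to point (Euclidean distance)."""
    return min(range(len(centers)), key=lambda j: math.dist(point, centers[j]))


def k_means(points, seeds, max_iter=100):
    """Alternate between assigning points and recomputing cluster means."""
    centers = [tuple(s) for s in seeds]  # seeds are the first guess of the means
    labels = None
    for _ in range(max_iter):
        # Assign each observation to its nearest current center.
        new_labels = [nearest(p, centers) for p in points]
        if new_labels == labels:
            break  # no assignment changed, so the clusters are stable
        labels = new_labels
        # Replace each center with the mean of the points assigned to it.
        for j in range(len(centers)):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the old center if a cluster is empty
                centers[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return centers, labels


def membership_probabilities(x, weights, means, sds):
    """Probability that the 1-D value x belongs to each normal component."""
    dens = [
        w * math.exp(-0.5 * ((x - m) / s) ** 2) / (s * math.sqrt(2 * math.pi))
        for w, m, s in zip(weights, means, sds)
    ]
    total = sum(dens)
    return [d / total for d in dens]


# Hypothetical data: two well-separated groups of three points each.
points = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0), (8.0, 8.0), (9.0, 8.0), (8.0, 9.0)]
seeds = [points[0], points[3]]
centers, labels = k_means(points, seeds)

# Hypothetical overlapping 1-D mixture: a value halfway between the two
# component means is not forced into one cluster; it gets a probability for each.
probs = membership_probabilities(5.0, [0.5, 0.5], [4.0, 6.0], [1.0, 1.0])
```

In this sketch, `k_means` gives every row a single hard label, while `membership_probabilities` returns one probability per cluster for the same row. That difference is why the normal mixtures approach is better suited to estimating group sizes when clusters overlap.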