The Mixed Model Power process assists you in planning your experiments. Starting with an exemplary experimental design data set and parameter settings for a relevant mixed model, it enables you to calculate power curves for a range of Type 1 error probabilities (alpha). In other words, this process helps you decide how big an experiment you need to run in order to be reasonably assured that the true effects in the study (a change in gene expression, for example) are deemed statistically significant. Conversely, this process also enables you to calculate the statistical power of an experiment, given a specified sample size. This process is typically run before you perform your experiment, and it helps to have conducted a pilot study in order to determine reasonable values for the variance components.

Mixed Model Power computes the statistical power of a set of one-degree-of-freedom hypothesis tests arising from a mixed linear model. You specify an experimental design file, parameters for relevant PROC MIXED statements (including fixed values for the variance components and ESTIMATE statements), and ranges of values for alpha and effect sizes. The process outputs a table of power values calculated using a noncentral t-distribution.
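For orientation, a power value of this kind is conventionally written as shown here. This is a textbook sketch, not a statement of the exact computation the process performs. It assumes a two-sided test, and the symbols are notation introduced for illustration: $\ell'\beta$ is the linear combination named in an ESTIMATE statement (the effect size), $\delta$ is the noncentrality parameter, and $\nu$ is the denominator degrees of freedom.

$$
\delta = \frac{\ell'\beta}{\operatorname{se}(\ell'\hat{\beta})}, \qquad
\text{Power}(\alpha) = P\left(T_{\nu,\delta} > t_{1-\alpha/2,\,\nu}\right) + P\left(T_{\nu,\delta} < -t_{1-\alpha/2,\,\nu}\right)
$$

Here $T_{\nu,\delta}$ denotes a noncentral t random variable with $\nu$ degrees of freedom and noncentrality $\delta$, and $t_{1-\alpha/2,\,\nu}$ is the central t critical value. The standard error in the denominator depends on the design and on the fixed variance components, which is why both must be supplied.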

- The Experimental Design Data Set (EDDS). It must include all relevant design variables of the experiment for which you want to compute power. The sample size equals the number of rows in this data set.
- The file containing PROC MIXED ESTIMATE statements. ESTIMATE statements are used to specify linear hypotheses of interest that are valid for each specified fixed effects model. Distinct power values are computed for each hypothesis test. See Estimate Builder for more details.
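To make the shape of a design table concrete, the following Python sketch builds a small hypothetical design with one row per experimental unit. The column names (`treatment`, `replicate`), the factor levels, and the CSV output are all assumptions made for illustration. The process itself expects a SAS data set, so a table like this would still need to be imported into SAS.

```python
import csv
import io
from itertools import product

# Hypothetical design factors; real designs usually carry more variables
# (for example, array, dye, or batch).
treatments = ["control", "treated"]
replicates = range(1, 5)  # four replicates per treatment

# One row per experimental unit: every treatment crossed with every replicate.
rows = [{"treatment": t, "replicate": r} for t, r in product(treatments, replicates)]

# Write the design as CSV text (kept in memory here rather than on disk).
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["treatment", "replicate"])
writer.writeheader()
writer.writerows(rows)

# The sample size is simply the number of rows in the design table.
sample_size = len(rows)
print(buffer.getvalue())
print("sample size:", sample_size)
```

Changing the number of replicates changes the row count, which is how a larger or smaller experiment would be represented in the design table.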

For detailed information about the files and data sets used or created by JMP Life Sciences software, see Files and Data Sets.

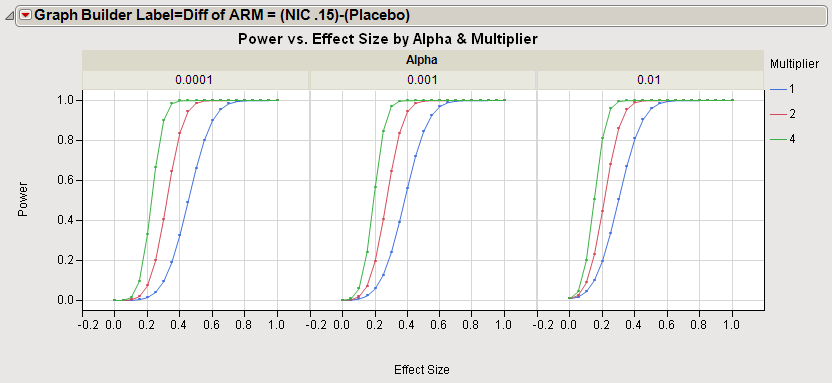

The output of the Mixed Model Power process includes one output data set listing the t-statistics and associated power values for each level of alpha (not shown) and the power curves shown below.

Effect sizes (log2 differences) are plotted along the x-axis of each plot, and power is plotted along the y-axis. The greater the power, the higher the probability of rejecting the null hypothesis when the observed difference is real. Note that, as expected, power increases for all effects as the effect size increases. In other words, the greater the difference due to the effect, the more likely you are to successfully conclude that the observed difference is real.

To compute power for a new design, generate the design of interest, save the table as a SAS data set, and rerun Mixed Model Power using the new design as the EDDS.