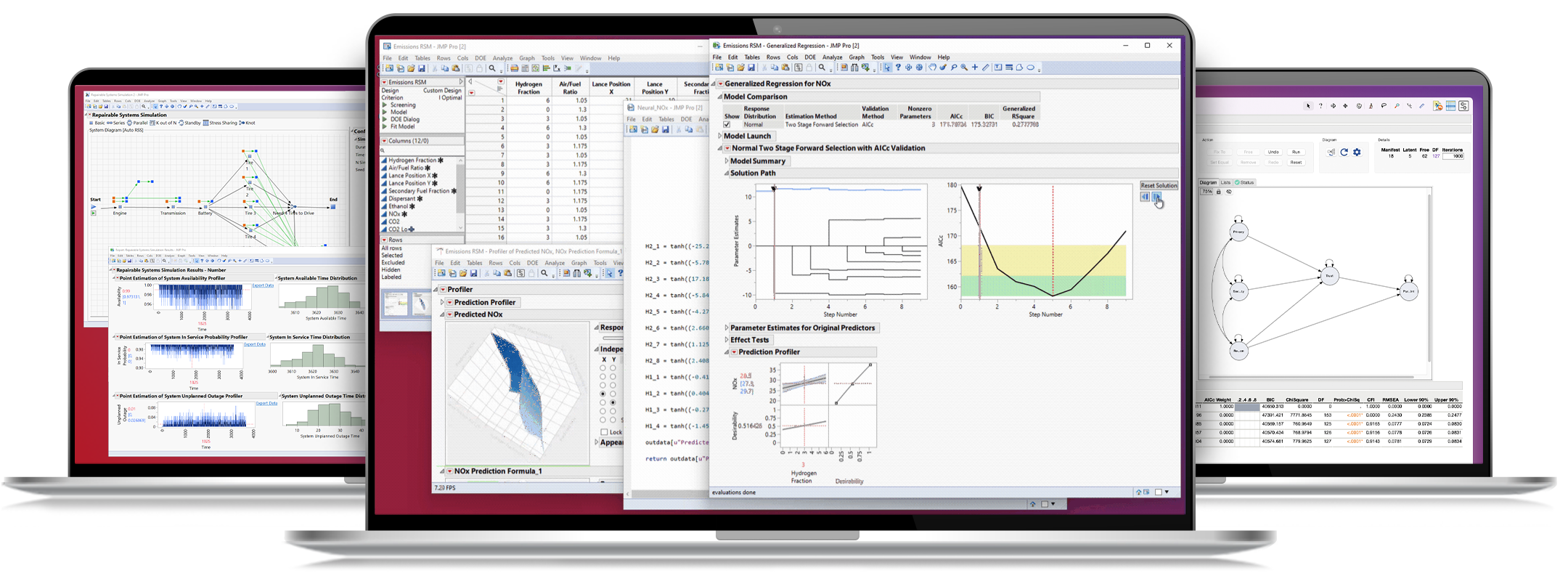


All the power. None of the complexity.

Build better models

Without the right predictive analytics tools, building a model to predict what will happen with new customers, new processes, or new risks becomes much more difficult. JMP Pro offers a rich set of algorithms that lets you build better models.

Improve your predictive models to help make better decisions

Enhance the power of your predictive models with various types of data, including unstructured text data you’ve collected – repair logs, engineering reports, customer survey response comments, and more. Use JMP Pro to organize and transform data into usable additions to your predictive models, enabling more confident decision making.

Screen, fit, and compare multiple models more efficiently

Handling your models doesn’t have to be painful – JMP Pro makes it easy to find the best fit to your data with model screening. Easily build candidate models, then profile and compare them, and generate score code in C, Python, JavaScript, SAS, or SQL.

“Going from low knowledge to high knowledge – that is what design engineering and process engineering are all about. You’re continuously delivering knowledge to the company.”

Ted Ellefson, Managing Principal Engineer in Mechanical R&D, Seagate

The Key Features of JMP Pro

Predictive Modeling and Cross-Validation

Use JMP Pro’s rich set of algorithms to build and validate your models more effectively.
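
For readers new to cross-validation, the idea can be sketched in a few lines of plain Python. This is only an illustration of k-fold validation in general, not JMP Pro's implementation: the function names, the one-predictor straight-line model, and the default of five folds are all assumptions made for the example.

```python
import random
from statistics import mean

def k_fold_indices(n_rows, k, seed=0):
    """Shuffle row indices and deal them into k nearly equal folds."""
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]

def fit_line(xs, ys):
    """Ordinary least squares for one predictor; returns (slope, intercept)."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, y_bar - slope * x_bar

def cross_validated_mse(xs, ys, k=5, seed=0):
    """Average held-out mean squared error across k folds."""
    fold_errors = []
    for held_out in k_fold_indices(len(xs), k, seed):
        held = set(held_out)
        train = [i for i in range(len(xs)) if i not in held]
        # Fit on the training rows only, then score the held-out rows.
        slope, intercept = fit_line([xs[i] for i in train], [ys[i] for i in train])
        errors = [(ys[i] - (slope * xs[i] + intercept)) ** 2 for i in held_out]
        fold_errors.append(mean(errors))
    return mean(fold_errors)
```

Each row is scored only by a model that never saw it during fitting, which is why the averaged error gives a more honest estimate of how the model will behave on new data.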

Model Screening and Comparison

Build a variety of models and determine the best one for the problem you are trying to solve.

Formula Depot and Score Code

Organize your models and save model score code in SAS, C, Python, JavaScript, or SQL.

Structural Equation Modeling (SEM)

Use this framework to fit a variety of models, including confirmatory factor analysis, path models, measurement error models, and latent growth curve models.

Modern Modeling

Use new modeling techniques, including Generalized Regression with penalized methods, to build better models, even with challenging data.

Functional Data Analysis

Create models of data that are functions, signals, or series with Functional Data Explorer (FDE).

Reliability Block Diagrams

Easily fix weak spots in your system and be better informed to prevent future system failures.
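
As a rough illustration of the arithmetic behind a reliability block diagram, the sketch below combines component reliabilities in series and in parallel. It assumes that components fail independently. The pump and valve figures are hypothetical, and the helper names are invented for this example rather than taken from JMP Pro.

```python
from math import prod

def series(*reliabilities):
    """A series block works only if every component works."""
    return prod(reliabilities)

def parallel(*reliabilities):
    """A parallel (redundant) block fails only if every component fails."""
    return 1 - prod(1 - r for r in reliabilities)

# Hypothetical system: one pump feeding two redundant valves.
pump, valve = 0.95, 0.80
system = series(pump, parallel(valve, valve))
print(round(system, 3))  # prints 0.912
```

In this made-up system the redundant valve pair reaches 0.96, so the single pump at 0.95 is the weak spot, and adding redundancy there would raise system reliability the most.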

Repairable Systems Simulation

Simulate system repair events to understand downtime and the number and cost of repair events.

Covering Arrays

Design your experiment to maximize the probability of finding defects while minimizing cost and time.
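
To make the idea concrete, the following sketch checks whether a set of test runs is a strength-2 covering array, meaning that every pair of factors shows every combination of their levels in at least one run. It is a minimal checker written for illustration, not the construction algorithm JMP Pro uses, and the function name and the three two-level factors are assumptions.

```python
from itertools import combinations, product

def uncovered_pairs(runs, levels):
    """List the (factor_i, factor_j, level_pair) combinations no run exercises.

    runs   -- list of tuples, one level per factor
    levels -- list of the allowed levels for each factor
    """
    missing = []
    for i, j in combinations(range(len(levels)), 2):
        seen = {(run[i], run[j]) for run in runs}
        for pair in product(levels[i], levels[j]):
            if pair not in seen:
                missing.append((i, j, pair))
    return missing

# Three two-level factors: a full factorial needs 8 runs,
# but these 4 runs already cover every pairwise combination.
levels = [[0, 1], [0, 1], [0, 1]]
runs = [(0, 0, 0), (0, 1, 1), (1, 0, 1), (1, 1, 0)]
print(uncovered_pairs(runs, levels))  # prints []
```

Dropping any one of the four runs leaves some pairs untested, which shows how a covering array trades run count against the interactions it can expose.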

Term Selection and Sentiment Analysis

Use your unstructured data to identify terms associated with a response and to explore basic sentiment.

Mixed Models

Analyze data involving both time and space, where multiple subjects are measured or groups of variables are correlated.

Uplift Models

Predict consumer segments most likely to respond favorably to an action, allowing targeted marketing decisions.
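
The quantity an uplift model targets can be sketched as the difference in response rate between treated and untreated customers within each segment. The example below is a simple per-segment calculation on made-up records, not the modeling method JMP Pro applies. The segment names, the record layout, and the function name are all hypothetical.

```python
from collections import defaultdict

def uplift_by_segment(records):
    """Treated response rate minus control response rate, per segment.

    records -- iterable of (segment, treated, responded) with boolean flags
    """
    # For each segment, keep [responders, total] for treated and control.
    counts = defaultdict(lambda: {True: [0, 0], False: [0, 0]})
    for segment, treated, responded in records:
        cell = counts[segment][treated]
        cell[0] += int(responded)
        cell[1] += 1
    uplift = {}
    for segment, cells in counts.items():
        (t_resp, t_n), (c_resp, c_n) = cells[True], cells[False]
        if t_n and c_n:  # skip segments missing either group
            uplift[segment] = t_resp / t_n - c_resp / c_n
    return uplift

# Hypothetical campaign data: (segment, treated, responded)
records = (
    [("new", True, True)] * 6 + [("new", True, False)] * 4
    + [("new", False, True)] * 2 + [("new", False, False)] * 8
    + [("loyal", True, True)] * 5 + [("loyal", True, False)] * 5
    + [("loyal", False, True)] * 5 + [("loyal", False, False)] * 5
)
result = uplift_by_segment(records)
```

With this invented data, the "new" segment responds far more often when treated, while the "loyal" segment responds at the same rate either way, so an offer would be better aimed at the first group.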

Generalized Linear Mixed Models (GLMM)

Fit models with both non-Gaussian response variables and random design effects.